
---
title: "Calling Online AI Models"
subtitle: "Run AI models online"
author: "Tony D"
date: "2025-03-18"
categories:
  - AI
  - R
  - Python
image: "images/S__6840322.jpg"
execute:
  warning: false
  error: false
  eval: false
---

This guide shows how to interact with online Large Language Models (LLMs) from R and Python. It uses the `ellmer` and `chattr` packages in R, and the `google-genai` and `chatlas` libraries in Python, to connect to Google Gemini, locally hosted Ollama models, and ChatGPT. It covers setting up API keys, defining models, sending prompts, and processing responses for tasks such as text generation and translation.

# 1. ellmer for R

```{r}

#pak::pkg_install('ellmer')

library(ellmer)

library(keyring)

```

## Google Gemini

### gemini-2.0-flash

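```{r}
# Create a Gemini chat object; the API key is read from the system keyring
chat_gemini_model <- chat_gemini(
  system_prompt = NULL,
  turns = NULL,
  base_url = "https://generativelanguage.googleapis.com/v1beta/",
  api_key = key_get("google_ai_api_key"),
  model = "gemini-2.0-flash",
  api_args = list(),
  echo = NULL
)

# Print the chat object to confirm the configuration
chat_gemini_model
```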

```{r}

chat_gemini_model$chat("Tell me three jokes about statisticians")

```

## Ollama on a local machine

### Set up Ollama locally

```{r}

library(ollamar)

ollamar::pull("llama3.1")

```

```{r}

ollamar::list_models()

```

### Define the model

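```{r}
# Connect to the local Ollama server and use the model pulled above
chat <- chat_ollama(
  system_prompt = NULL,
  turns = NULL,
  base_url = "http://localhost:11434",
  model = "llama3.1",
  seed = NULL,
  api_args = list(),
  echo = NULL
)

# Confirm which model the chat object is using
chat$get_model()
```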

### Run the LLM

```{r}

chat$chat("Tell me three jokes about statisticians")

```

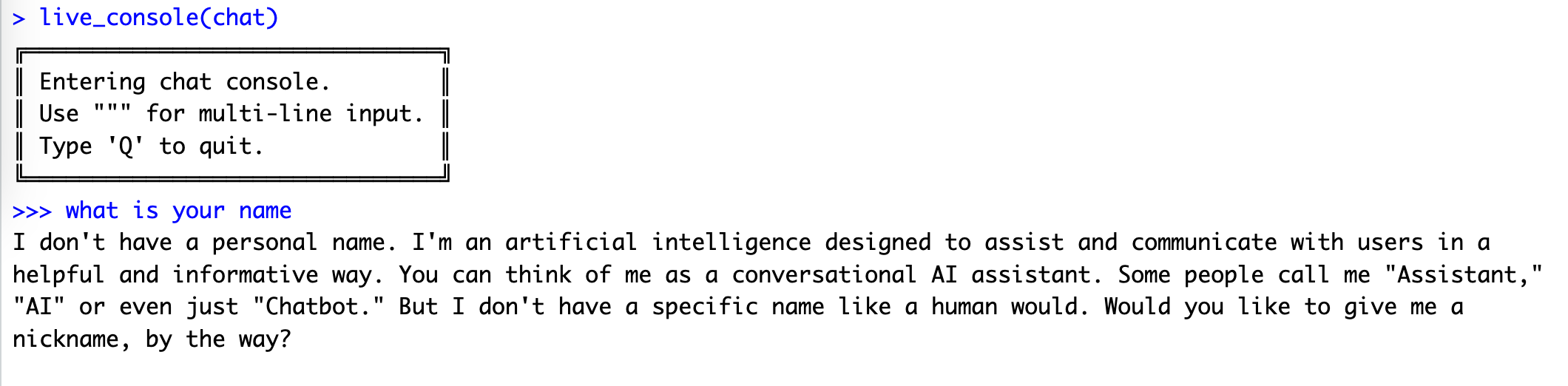

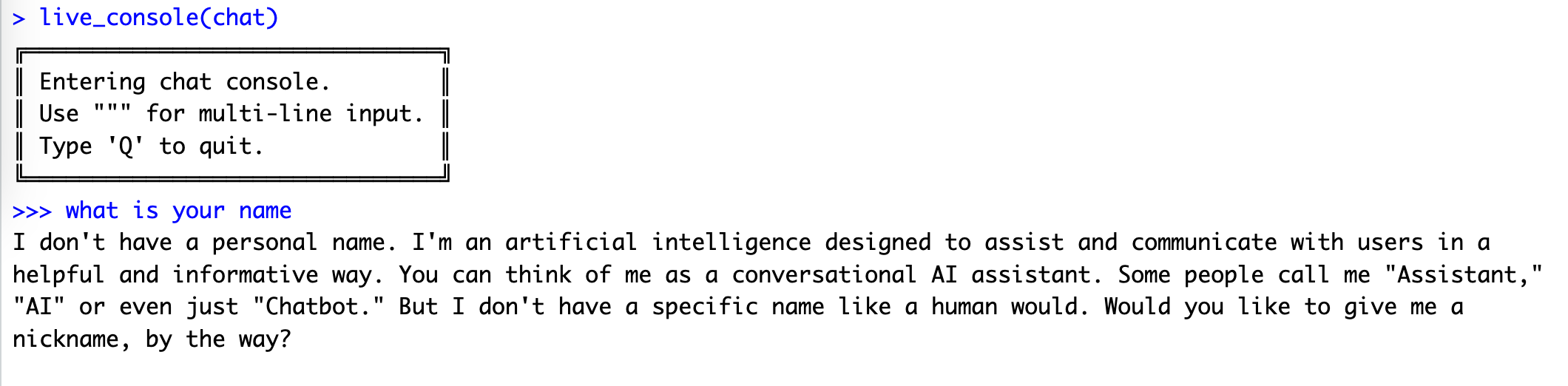

### Run in the console

```{r}

live_console(chat)

```


### Check token usage

```{r}

token_usage()

```

# 2. chattr LLM package for R

## Step 1: Install the package

```{r}

#remotes::install_github("mlverse/chattr")

```

```{r}

library(chattr)

```

## Step 2: Set the API key

- Log in at https://platform.openai.com/
- Go to Settings (gear icon at the top right)
- Find API Keys in the menu on the left
- Follow the process to create a new secret key
- Copy your secret key (it is shown only once, so make sure you copy it)

```{r}

# Environment variable names are case-sensitive on macOS and Linux
Sys.setenv(OPENAI_API_KEY = "sk-xxxxxxxx")

```

## Step 3: Run ChatGPT as a background job

### Select a model

```{r}
# The Copilot model does not need OPENAI_API_KEY
chattr_use("copilot")
```

### Add a prompt

```{r}

chattr_defaults(prompt = "{readLines(system.file('prompt/base.txt', package = 'chattr'))}")

```

### Run ChatGPT as a background job

Do not use the GitHub Copilot model with `chattr`. GitHub will block this usage.

```{r}

# run

chattr_app(as_job = TRUE)

```

Done!

## Or open the chat app automatically when RStudio starts

### Step 1: Find the .Rprofile file

```{r}

#install.packages("usethis") # Install if not already installed

usethis::edit_r_profile()

```

### Step 2: Edit the .Rprofile file as below

```{r filename='.Rprofile'}
#| eval: false
# Load the chattr app after RStudio is fully loaded
setHook("rstudio.sessionInit", function(newSession) {
  if (newSession) {
    Sys.sleep(2) # Wait 2 seconds before starting chattr to ensure RStudio is ready
    tryCatch({
      library(chattr)
      chattr_use("copilot")
      # Sys.setenv("OPENAI_API_KEY" = "your-api-key-here")
      chattr_defaults(prompt = "{readLines(system.file('prompt/base.txt', package = 'chattr'))}")
      chattr_app(as_job = TRUE)
    }, error = function(e)
      message("Error starting chattr: ", e$message))
  }
}, action = "append")
```

# 3. Google Python API

```{bash}

pip install -q -U google-genai

```

```{python}

from google import genai
import keyring

# Read the API key from the system keyring instead of hardcoding it
client = genai.Client(api_key=keyring.get_password("system", "google_ai_api_key"))

response = client.models.generate_content(
    model="gemini-2.0-flash", contents="Explain how AI works in a few words"
)
print(response.text)

```
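
To make the call above less of a black box, here is a minimal sketch of the kind of REST request that sits behind a Gemini text prompt, built with the standard library only. The helper name `build_gemini_request` and the `"YOUR_KEY"` placeholder are hypothetical, and the client library does considerably more (retries, typed responses, streaming), so treat this as an illustration of the request shape rather than a description of how `google-genai` is implemented.

```python
import json
import urllib.request

# Same base URL that the ellmer example passes as base_url
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/"


def build_gemini_request(prompt, api_key, model="gemini-2.0-flash"):
    """Build (but do not send) a generateContent request for a text prompt.

    Hypothetical helper for illustration only.
    """
    url = f"{BASE_URL}models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


req = build_gemini_request("Explain how AI works in a few words", api_key="YOUR_KEY")
print(req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` and a valid key should return JSON in which the generated text sits under `candidates[0].content.parts[0].text`.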

# 4. chatlas for Python

https://github.com/posit-dev/chatlas

```{bash}

pip install -U chatlas

```

```{python}

from chatlas import ChatGoogle

from chatlas import ChatOllama

from chatlas import token_usage

import keyring

```
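
The examples in this section read the key with `keyring.get_password("system", "google_ai_api_key")`. On a machine without a keyring backend (a CI runner or a container, for example) an environment variable is a common alternative. The sketch below checks the environment first and falls back to the keyring. The helper name `get_api_key` and the variable name `GOOGLE_API_KEY` in the usage note are assumptions for illustration, not something the original setup defines.

```python
import os


def get_api_key(env_var, service="system", username="google_ai_api_key"):
    """Return an API key from the environment, else from the OS keyring.

    Hypothetical helper: the env_var name is whatever you choose to export.
    """
    key = os.environ.get(env_var)
    if key:
        return key
    try:
        import keyring  # optional dependency, only needed for the fallback
    except ImportError:
        keyring = None
    if keyring is not None:
        key = keyring.get_password(service, username)
        if key:
            return key
    raise RuntimeError(f"No API key found in {env_var} or the keyring")
```

With this in place, `api_key=get_api_key("GOOGLE_API_KEY")` could replace the direct `keyring.get_password(...)` call in the chunks below.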

## Gemini model

```{python}

from chatlas import ChatGoogle

# The system prompt sets the persona used for every reply
chat_google_model = ChatGoogle(
    model="gemini-2.0-flash",
    api_key=keyring.get_password("system", "google_ai_api_key"),
    system_prompt="You are a whisky expert",
)
chat_google_model

```

```{python}

chat_google_model.chat("translate following whisky tasting note to English:微酸,脏麦芽。菲特肯还是要找1988")

```

## Local Ollama model

```{python}

from chatlas import ChatOllama

# Ollama runs locally, so no API key is needed
chat_llama_model = ChatOllama(
    model="llama3.2",
    system_prompt="You are a whisky expert",
)
chat_llama_model

```

```{python}

chat_llama_model.chat("translate following whisky tasting note to English:微酸,脏麦芽。菲特肯还是要找1988")

```
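
For comparison, the same kind of system-plus-user conversation can be expressed directly against Ollama's native REST API on `http://localhost:11434`. This is a sketch of that API's request shape using only the standard library, not a description of what `chatlas` sends internally, and `build_ollama_chat` is a hypothetical helper name.

```python
import json
import urllib.request

# Default address of a locally running Ollama server
OLLAMA_URL = "http://localhost:11434"


def build_ollama_chat(prompt, model="llama3.2", system_prompt=None):
    """Build (but do not send) a request for Ollama's /api/chat endpoint.

    Hypothetical helper for illustration only.
    """
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": prompt})
    # stream=False asks for a single JSON reply instead of chunked output
    body = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_ollama_chat(
    "Describe a peated single malt in one sentence",
    system_prompt="You are a whisky expert",
)
print(req.full_url)
```

With the server running and the model pulled, `json.load(urllib.request.urlopen(req))["message"]["content"]` should hold the reply text.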

```{python}

token_usage()

```