Code

from google import genai

import keyring

from PIL import Image

import torchvision.transforms as T

from torchvision.transforms.functional import InterpolationMode

import sys

import pickle

import math

import numpy as np

import torchTony D

April 21, 2025

A demonstration of how to perform OCR using both online (Gemini 2.5) and offline (InternVL3 1B) AI models, with Python code examples for text extraction from images in both English and Chinese.

This document demonstrates how to perform Optical Character Recognition (OCR) using both online and offline AI models. It provides Python code examples for extracting text from images in both English and Chinese using the Gemini 2.5 API. Additionally, it includes instructions and code for setting up and using the InternVL3 1B model locally, including functions for image preprocessing and model splitting. This guide is a valuable resource for anyone looking to implement OCR in their projects.

with Gemini 2.5 online/InternVL3 offline

List models

https://huggingface.co/OpenGVLab/InternVL3-8B-hf

If using better model will increase accuracy

testing

IMAGENET_MEAN = (0.485, 0.456, 0.406)

IMAGENET_STD = (0.229, 0.224, 0.225)

def build_transform(input_size):

MEAN, STD = IMAGENET_MEAN, IMAGENET_STD

transform = T.Compose([

T.Lambda(lambda img: img.convert('RGB') if img.mode != 'RGB' else img),

T.Resize((input_size, input_size), interpolation=InterpolationMode.BICUBIC),

T.ToTensor(),

T.Normalize(mean=MEAN, std=STD)

])

return transform

def find_closest_aspect_ratio(aspect_ratio, target_ratios, width, height, image_size):

best_ratio_diff = float('inf')

best_ratio = (1, 1)

area = width * height

for ratio in target_ratios:

target_aspect_ratio = ratio[0] / ratio[1]

ratio_diff = abs(aspect_ratio - target_aspect_ratio)

if ratio_diff < best_ratio_diff:

best_ratio_diff = ratio_diff

best_ratio = ratio

elif ratio_diff == best_ratio_diff:

if area > 0.5 * image_size * image_size * ratio[0] * ratio[1]:

best_ratio = ratio

return best_ratio

def dynamic_preprocess(image, min_num=1, max_num=12, image_size=448, use_thumbnail=False):

orig_width, orig_height = image.size

aspect_ratio = orig_width / orig_height

# calculate the existing image aspect ratio

target_ratios = set(

(i, j) for n in range(min_num, max_num + 1) for i in range(1, n + 1) for j in range(1, n + 1) if

i * j <= max_num and i * j >= min_num)

target_ratios = sorted(target_ratios, key=lambda x: x[0] * x[1])

# find the closest aspect ratio to the target

target_aspect_ratio = find_closest_aspect_ratio(

aspect_ratio, target_ratios, orig_width, orig_height, image_size)

# calculate the target width and height

target_width = image_size * target_aspect_ratio[0]

target_height = image_size * target_aspect_ratio[1]

blocks = target_aspect_ratio[0] * target_aspect_ratio[1]

# resize the image

resized_img = image.resize((target_width, target_height))

processed_images = []

for i in range(blocks):

box = (

(i % (target_width // image_size)) * image_size,

(i // (target_width // image_size)) * image_size,

((i % (target_width // image_size)) + 1) * image_size,

((i // (target_width // image_size)) + 1) * image_size

)

# split the image

split_img = resized_img.crop(box)

processed_images.append(split_img)

assert len(processed_images) == blocks

if use_thumbnail and len(processed_images) != 1:

thumbnail_img = image.resize((image_size, image_size))

processed_images.append(thumbnail_img)

return processed_images

def load_image(image_file, input_size=448, max_num=12):

image = Image.open(image_file).convert('RGB')

transform = build_transform(input_size=input_size)

images = dynamic_preprocess(image, image_size=input_size, use_thumbnail=True, max_num=max_num)

pixel_values = [transform(image) for image in images]

pixel_values = torch.stack(pixel_values)

return pixel_values

def split_model(model_name):

device_map = {}

world_size = torch.cuda.device_count()

config = AutoConfig.from_pretrained(model_path, trust_remote_code=True)

num_layers = config.llm_config.num_hidden_layers

# Since the first GPU will be used for ViT, treat it as half a GPU.

num_layers_per_gpu = math.ceil(num_layers / (world_size - 0.5))

num_layers_per_gpu = [num_layers_per_gpu] * world_size

num_layers_per_gpu[0] = math.ceil(num_layers_per_gpu[0] * 0.5)

layer_cnt = 0

for i, num_layer in enumerate(num_layers_per_gpu):

for j in range(num_layer):

device_map[f'language_model.model.layers.{layer_cnt}'] = i

layer_cnt += 1

device_map['vision_model'] = 0

device_map['mlp1'] = 0

device_map['language_model.model.tok_embeddings'] = 0

device_map['language_model.model.embed_tokens'] = 0

device_map['language_model.output'] = 0

device_map['language_model.model.norm'] = 0

device_map['language_model.model.rotary_emb'] = 0

device_map['language_model.lm_head'] = 0

device_map[f'language_model.model.layers.{num_layers - 1}'] = 0

return device_map

---

title: "AI图片识别文字"

subtitle: "AI Optical character recognition"

author: "Tony D"

date: "2025-04-21"

categories:

- AI

- R

- Python

execute:

warning: false

error: false

image: 'images/001.png'

---

A demonstration of how to perform OCR using both online (Gemini 2.5) and offline (InternVL3 1B) AI models, with Python code examples for text extraction from images in both English and Chinese.

This document demonstrates how to perform Optical Character Recognition (OCR) using both online and offline AI models. It provides Python code examples for extracting text from images in both English and Chinese using the Gemini 2.5 API. Additionally, it includes instructions and code for setting up and using the InternVL3 1B model locally, including functions for image preprocessing and model splitting. This guide is a valuable resource for anyone looking to implement OCR in their projects.

with Gemini 2.5 online/InternVL3 offline

# Using Gemini 2.5 online

```{python}

from google import genai

import keyring

from PIL import Image

import torchvision.transforms as T

from torchvision.transforms.functional import InterpolationMode

import sys

import pickle

import math

import numpy as np

import torch

```

```{python}

#| echo: false

import pickle

f = open('store.pckl', 'rb')

response_gemini_en,response_gemini,response_en,response = pickle.load(f)

f.close()

```

```{python}

#| eval: false

client = genai.Client(api_key=keyring.get_password("system", "google_ai_api_key"))

```

List models

```{python}

#| eval: false

print("List of models:\n")

for m in client.models.list():

for action in m.supported_actions:

# if action == "generateContent":

print(m.name+" "+ action)

```

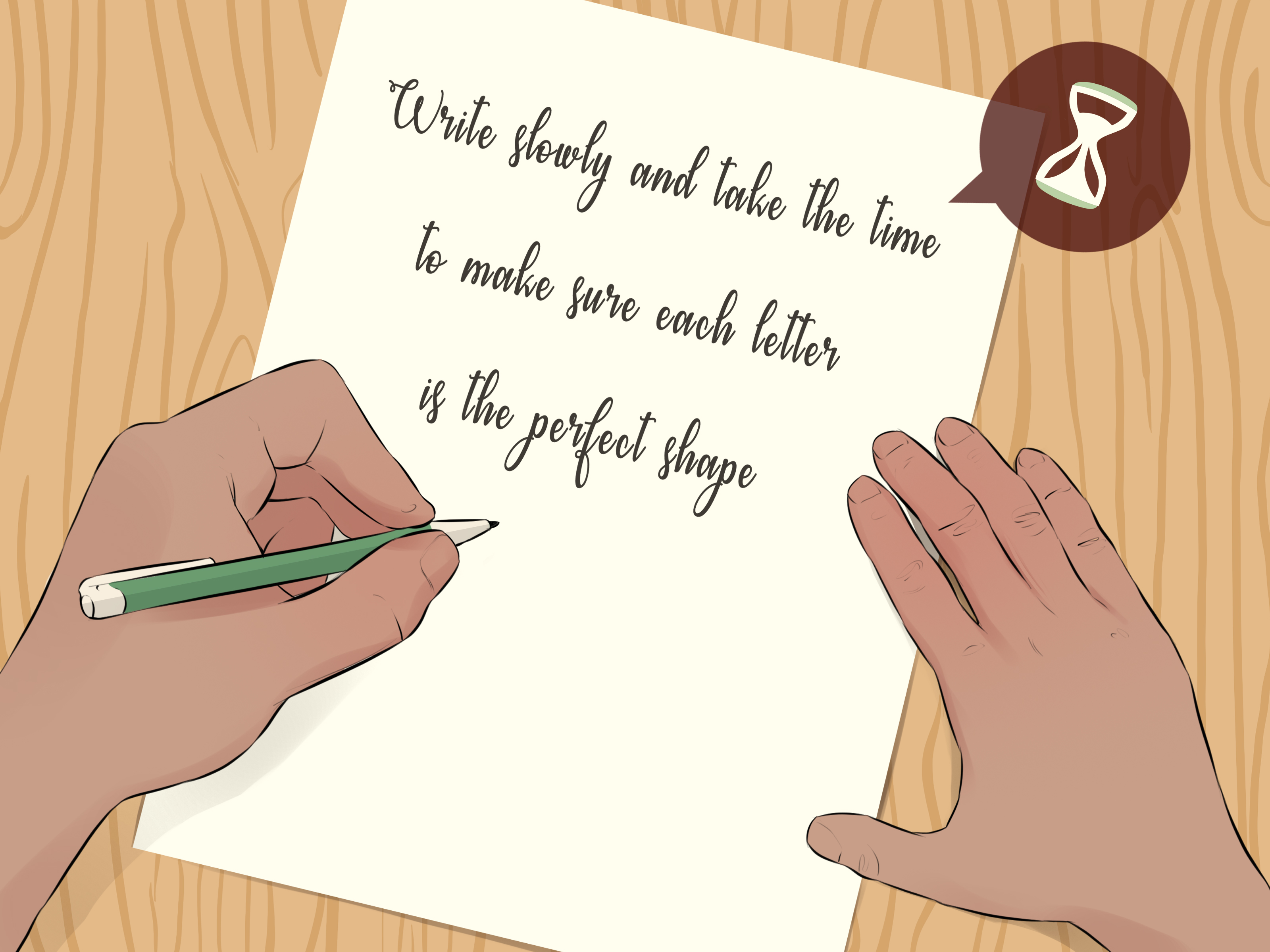

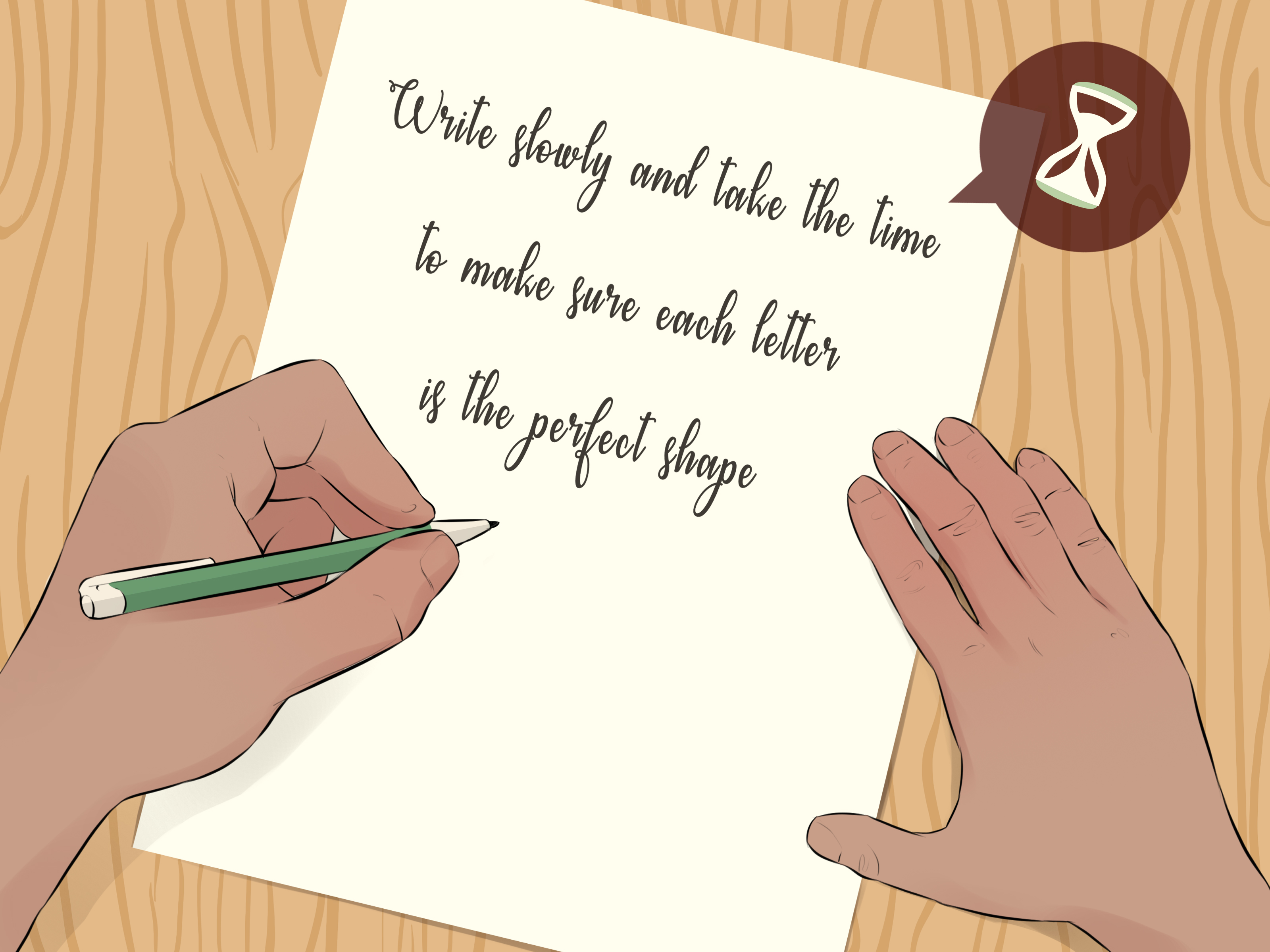

## English Extract

```{python}

#| eval: false

image = Image.open("images/english.jpg")

response_gemini_en = client.models.generate_content(

model="gemini-2.5-pro-exp-03-25",

contents=[image, "Extract text from image"])

```

```{python}

print(response_gemini_en.text)

```

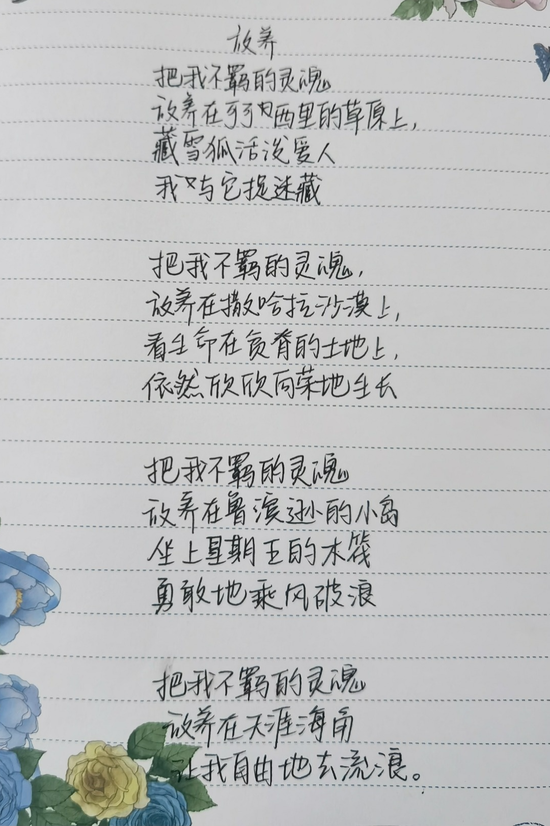

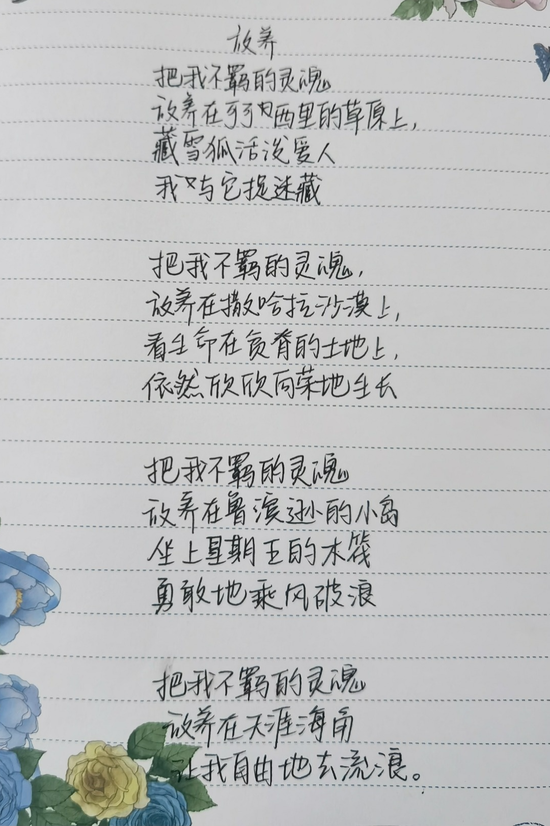

## Chinese Extract

```{python}

#| eval: false

image = Image.open("images/chinese.png")

response_gemini = client.models.generate_content(

model="gemini-2.5-pro-exp-03-25",

contents=[image, "提取图上的文字"])

```

```{python}

print(response_gemini.text)

```

# Using InternVL3 1B model offline

https://huggingface.co/OpenGVLab/InternVL3-8B-hf

If using better model will increase accuracy

```{python}

#| eval: false

print(sys.version)

```

```{python}

#| eval: false

pip install --upgrade transformers

pip install einops timm

pip install -U bitsandbytes

```

```{python}

#| eval: false

import os

os.system('pip show transformers')

```

```{python}

#| eval: false

import torch

from transformers import AutoTokenizer, AutoModel,pipeline

path = "OpenGVLab/InternVL3-1B"

model = AutoModel.from_pretrained(path,torch_dtype=torch.bfloat16,trust_remote_code=True).eval()

tokenizer = AutoTokenizer.from_pretrained(path, trust_remote_code=True)

```

```{python}

generation_config = dict(max_new_tokens=1024, do_sample=True)

```

testing

```{python}

#| eval: false

question = 'Hello, who are you?'

response, history = model.chat(tokenizer, None, question, generation_config, history=None, return_history=True)

print(f'User: {question}\nAssistant: {response}')

```

## single-image single-round conversation (单图单轮对话)

```{python}

#| eval: false

IMAGENET_MEAN = (0.485, 0.456, 0.406)

IMAGENET_STD = (0.229, 0.224, 0.225)

def build_transform(input_size):

MEAN, STD = IMAGENET_MEAN, IMAGENET_STD

transform = T.Compose([

T.Lambda(lambda img: img.convert('RGB') if img.mode != 'RGB' else img),

T.Resize((input_size, input_size), interpolation=InterpolationMode.BICUBIC),

T.ToTensor(),

T.Normalize(mean=MEAN, std=STD)

])

return transform

def find_closest_aspect_ratio(aspect_ratio, target_ratios, width, height, image_size):

best_ratio_diff = float('inf')

best_ratio = (1, 1)

area = width * height

for ratio in target_ratios:

target_aspect_ratio = ratio[0] / ratio[1]

ratio_diff = abs(aspect_ratio - target_aspect_ratio)

if ratio_diff < best_ratio_diff:

best_ratio_diff = ratio_diff

best_ratio = ratio

elif ratio_diff == best_ratio_diff:

if area > 0.5 * image_size * image_size * ratio[0] * ratio[1]:

best_ratio = ratio

return best_ratio

def dynamic_preprocess(image, min_num=1, max_num=12, image_size=448, use_thumbnail=False):

orig_width, orig_height = image.size

aspect_ratio = orig_width / orig_height

# calculate the existing image aspect ratio

target_ratios = set(

(i, j) for n in range(min_num, max_num + 1) for i in range(1, n + 1) for j in range(1, n + 1) if

i * j <= max_num and i * j >= min_num)

target_ratios = sorted(target_ratios, key=lambda x: x[0] * x[1])

# find the closest aspect ratio to the target

target_aspect_ratio = find_closest_aspect_ratio(

aspect_ratio, target_ratios, orig_width, orig_height, image_size)

# calculate the target width and height

target_width = image_size * target_aspect_ratio[0]

target_height = image_size * target_aspect_ratio[1]

blocks = target_aspect_ratio[0] * target_aspect_ratio[1]

# resize the image

resized_img = image.resize((target_width, target_height))

processed_images = []

for i in range(blocks):

box = (

(i % (target_width // image_size)) * image_size,

(i // (target_width // image_size)) * image_size,

((i % (target_width // image_size)) + 1) * image_size,

((i // (target_width // image_size)) + 1) * image_size

)

# split the image

split_img = resized_img.crop(box)

processed_images.append(split_img)

assert len(processed_images) == blocks

if use_thumbnail and len(processed_images) != 1:

thumbnail_img = image.resize((image_size, image_size))

processed_images.append(thumbnail_img)

return processed_images

def load_image(image_file, input_size=448, max_num=12):

image = Image.open(image_file).convert('RGB')

transform = build_transform(input_size=input_size)

images = dynamic_preprocess(image, image_size=input_size, use_thumbnail=True, max_num=max_num)

pixel_values = [transform(image) for image in images]

pixel_values = torch.stack(pixel_values)

return pixel_values

def split_model(model_name):

device_map = {}

world_size = torch.cuda.device_count()

config = AutoConfig.from_pretrained(model_path, trust_remote_code=True)

num_layers = config.llm_config.num_hidden_layers

# Since the first GPU will be used for ViT, treat it as half a GPU.

num_layers_per_gpu = math.ceil(num_layers / (world_size - 0.5))

num_layers_per_gpu = [num_layers_per_gpu] * world_size

num_layers_per_gpu[0] = math.ceil(num_layers_per_gpu[0] * 0.5)

layer_cnt = 0

for i, num_layer in enumerate(num_layers_per_gpu):

for j in range(num_layer):

device_map[f'language_model.model.layers.{layer_cnt}'] = i

layer_cnt += 1

device_map['vision_model'] = 0

device_map['mlp1'] = 0

device_map['language_model.model.tok_embeddings'] = 0

device_map['language_model.model.embed_tokens'] = 0

device_map['language_model.output'] = 0

device_map['language_model.model.norm'] = 0

device_map['language_model.model.rotary_emb'] = 0

device_map['language_model.lm_head'] = 0

device_map[f'language_model.model.layers.{num_layers - 1}'] = 0

return device_map

```

### English Extract

```{python}

#| eval: false

pixel_values = load_image('images/english.jpg').to(torch.bfloat16)

question = '<image>\nPlease Extract text from image'

response_en = model.chat(tokenizer, pixel_values, question, generation_config)

```

```{python}

print(response_en)

```

### Chinese Extract

```{python}

#| eval: false

pixel_values = load_image('images/chinese.png').to(torch.bfloat16)

question = '<image>\n提取图上的文字'

response = model.chat(tokenizer, pixel_values, question, generation_config)

```

```{python}

print(response)

```

```{python}

#| echo: false

# save result

f = open('store.pckl', 'wb')

pickle.dump([response_gemini_en,response_gemini,response_en,response]

,f)

f.close()

```